Inference

Overview

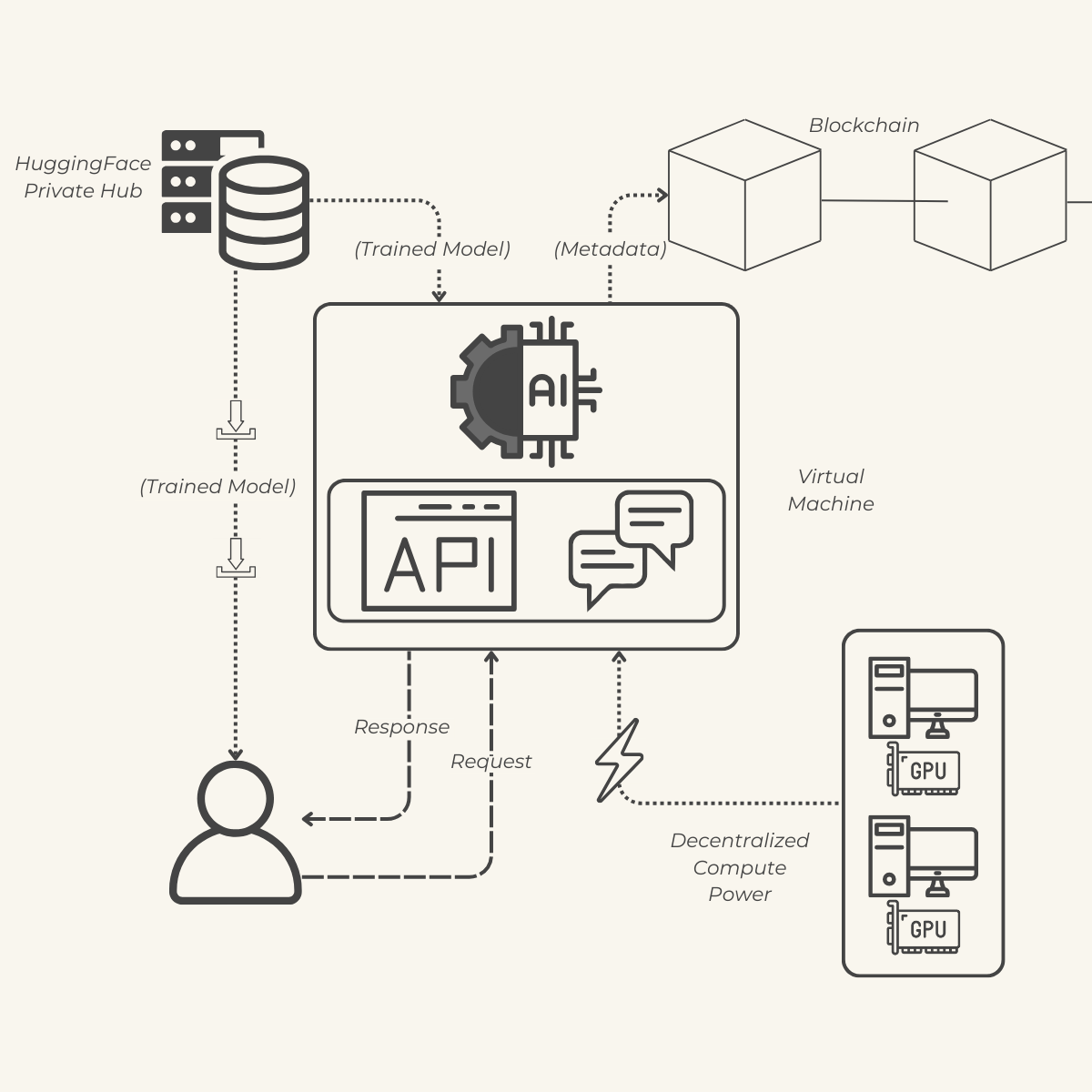

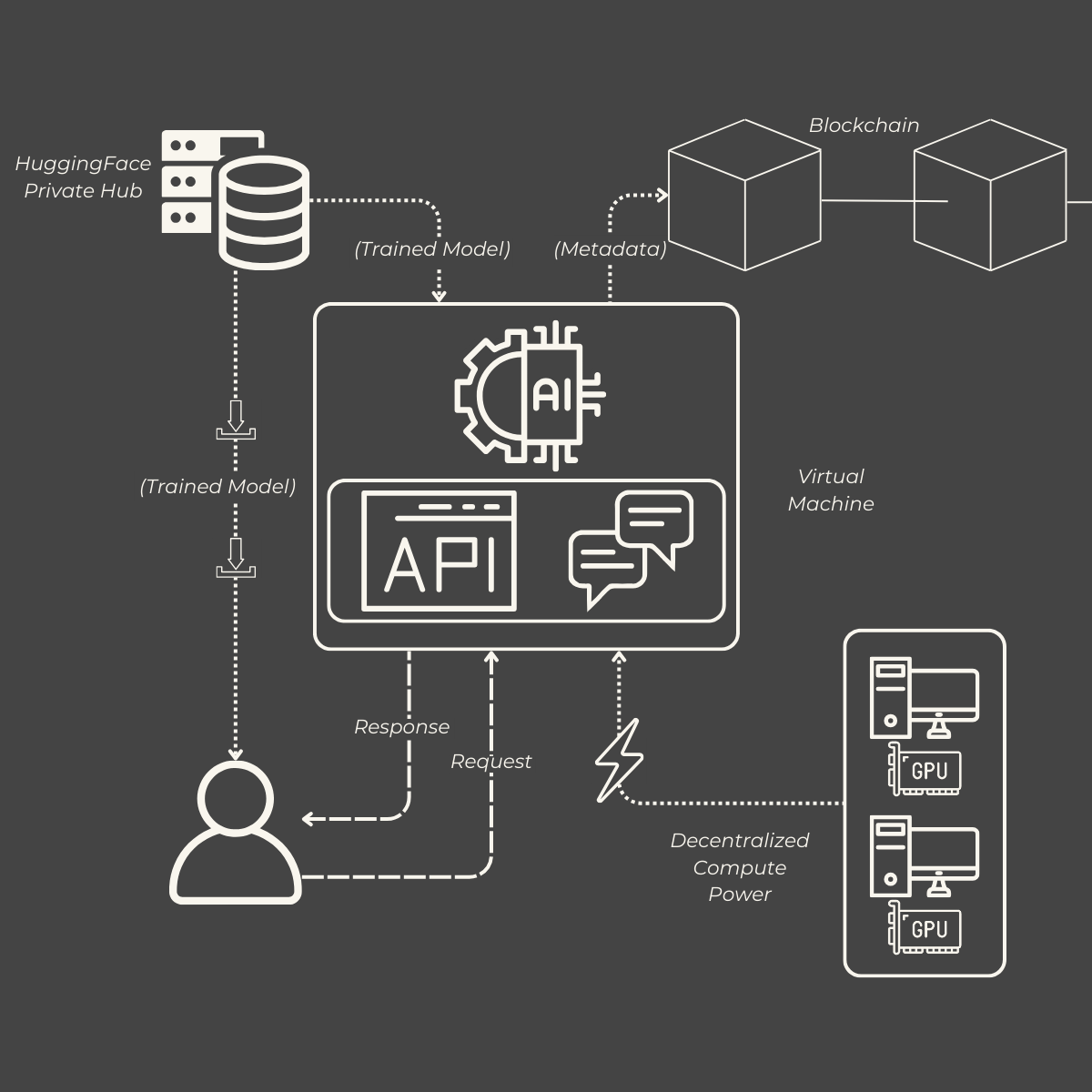

Inference is the process of using a trained model to make predictions or generate responses from new input data. Our platform is built for straightforward integration and real-time interaction, and you can work with your models through two primary methods: API Access and Chatbot.

API Access

Seamless Integration

Our platform offers an API that lets you interact with your trained models programmatically. Whether you are building applications or services, or integrating with existing systems, the API gives you a straightforward way to put your models to work.

Hosted on Decentralized GPUs

The API is hosted on virtual machines powered by decentralized GPUs, similar to our training infrastructure. This setup provides scalable, reliable performance, so your applications can handle varying loads efficiently.

Supported Models

You can use any model from Hugging Face or UnslothAI with our API. You can also deploy and interact with the models you have trained on our platform, so public and custom models share the same inference workflow.
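As an illustration, an API call would typically carry the prompt, the model to use (a Hugging Face or UnslothAI model, or one you trained), and generation parameters such as temperature, top_p, and max_tokens. The sketch below uses only the Python standard library. The endpoint URL, the bearer-token header, and the JSON field names (`model`, `prompt`, `instructions`, `parameters`) are hypothetical placeholders, not the platform's documented request schema.

```python
import json
import urllib.request

# Hypothetical endpoint and key: the platform's real URL, auth scheme and
# request schema are not documented here.
API_URL = "https://api.example.com/v1/inference"
API_KEY = "YOUR_API_KEY"


def build_inference_request(model: str, prompt: str, instructions: str = "",
                            temperature: float = 0.7, top_p: float = 0.9,
                            max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for one inference call."""
    payload = {
        "model": model,                # Hugging Face / UnslothAI id or a custom model (assumed field)
        "prompt": prompt,
        "instructions": instructions,  # optional context sent with the prompt (assumed field)
        "parameters": {
            "temperature": temperature,
            "top_p": top_p,
            "max_tokens": max_tokens,
        },
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",  # assumed auth scheme
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_inference_request(
        model="your-org/your-model",  # placeholder model id
        prompt="Summarize the benefits of decentralized GPUs in one sentence.",
    )
    # Inspect the request locally; no network call is made here.
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Calling `send(req)` would perform the actual HTTP request once the placeholders are replaced with a real endpoint and credentials. Building the request separately from sending it keeps the payload easy to inspect and test.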

Chatbot

Real-Time Interactions

In addition to API access, we offer a chatbot interface connected directly to your model. It gives you a more intuitive, real-time way to engage with your model through conversation.

Enhanced User Experience

The chatbot provides an easy-to-use interface for testing and interacting with your models without the need for extensive programming. It's perfect for demonstrations, quick experiments, or integrating conversational AI into your projects.

Supported Models

Like the API, the chatbot supports all models from Hugging Face and UnslothAI, as well as any custom models you have trained, so you can converse with whichever model you prefer.

Blockchain Recording

Secure and Transparent Logging

Every interaction with your model, whether through the API or the chatbot, is encrypted and recorded on a blockchain. This ensures that all inference activities are secure, transparent, and tamper-proof.

Metadata Storage

Each inference request logs essential metadata, including:

- DateTime: The timestamp of when the inference request was made.

- GPU Used: Details about the GPU resources utilized for the inference.

- GPU Provider: The name or identifier of the provider supplying the GPU resources.

- GPU Price/Hour: The cost per hour for using the GPU resources.

- Model Used: Information about the specific model deployed.

- Model Parameters: Key parameters used for the model during inference (e.g., temperature, top_p, max_tokens).

- Prompt: The input provided for the inference.

- Prompt Instructions: Any specific instructions or context provided with the prompt.

- Response: The output generated by the model.

- Sender Address: The address initiating the inference request.
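A minimal sketch of how one logged record could be structured and fingerprinted, using only the Python standard library. The field names are hypothetical snake_case versions of the metadata list above, the currency of the price field is an assumption, and the encryption step the platform applies is omitted. The SHA-256 digest shows how a deterministic serialization lets any later change to a record be detected.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class InferenceRecord:
    """One inference log entry; hypothetical field names mirror the metadata list."""
    date_time: str               # ISO 8601 timestamp of the request
    gpu_used: str
    gpu_provider: str
    gpu_price_per_hour: float    # currency unit is an assumption (e.g. USD)
    model_used: str
    model_parameters: dict = field(default_factory=dict)  # temperature, top_p, ...
    prompt: str = ""
    prompt_instructions: str = ""
    response: str = ""
    sender_address: str = ""

    def canonical_json(self) -> bytes:
        """Deterministic serialization: keys sorted at every level, no extra spaces."""
        return json.dumps(asdict(self), sort_keys=True,
                          separators=(",", ":")).encode("utf-8")

    def digest(self) -> str:
        """SHA-256 fingerprint of the record (not encryption)."""
        return hashlib.sha256(self.canonical_json()).hexdigest()


if __name__ == "__main__":
    record = InferenceRecord(
        date_time="2024-01-01T12:00:00Z",
        gpu_used="example-gpu",          # placeholder values throughout
        gpu_provider="example-provider",
        gpu_price_per_hour=1.25,
        model_used="your-org/your-model",
        model_parameters={"temperature": 0.7, "top_p": 0.9, "max_tokens": 256},
        prompt="Hello",
        response="Hi there!",
        sender_address="0xYourAddress",
    )
    print(record.digest())
```

Because keys are sorted before hashing, two records with the same content always produce the same digest, regardless of the order in which parameters were supplied.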

Data Integrity and Privacy

All metadata is encrypted to protect sensitive information. The use of blockchain technology guarantees that the records are immutable and verifiable, fostering trust and accountability in all inference operations.
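Immutability and verifiability of this kind rest on linking each record to the hash of the one before it. The following is a minimal hash-chain sketch of that idea, not the platform's on-chain implementation: a real blockchain also replicates the ledger across nodes and agrees on new blocks through a consensus protocol. `entry_hash`, `append`, and `verify` are illustrative names.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash an entry's payload together with its predecessor's hash."""
    body = json.dumps({"prev": prev_hash, "payload": payload},
                      sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(chain: list, payload: dict) -> None:
    """Append a payload, linking it to the last entry's hash."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"prev": prev, "payload": payload,
                  "hash": entry_hash(prev, payload)})


def verify(chain: list) -> bool:
    """Recompute every link; an edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["payload"]):
            return False
        prev = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    append(log, {"model": "m", "response": "first"})
    append(log, {"model": "m", "response": "second"})
    print(verify(log))                       # True
    log[0]["payload"]["response"] = "edited"
    print(verify(log))                       # False: the first link no longer matches
```

Editing any earlier entry changes its hash, which no longer matches the `prev` value stored in the next entry, so tampering is detectable by anyone who recomputes the chain.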

Model Export

Flexibility and Control

Some users need to run their models outside our platform. The model export feature lets you download your trained models so you can deploy them in other environments or integrate them into other applications as needed.

Supported Formats

Exported models are provided in standard formats compatible with various machine learning frameworks, ensuring easy integration and deployment across different platforms and services.

Custom Usage

Whether you're migrating to another service, conducting further research, or deploying your model in a custom environment, the export feature provides the flexibility to use your models exactly as you envision.

Two Ways to Perform Inference

1. API

- Use Cases: Application integration, automated services, backend processing.

- Advantages: Scalability, programmability, suitable for high-volume requests.

2. Chatbot

- Use Cases: Real-time interactions, conversational interfaces, demonstrations.

- Advantages: User-friendly, no coding required, ideal for interactive use cases.

Both methods support all models from Hugging Face and UnslothAI, as well as any custom models you've trained on the platform. This dual approach ensures that you have the flexibility to choose the best method for your specific needs.

Conclusion

Our inference infrastructure is designed to provide flexibility, security, and efficiency. By offering both API and chatbot interfaces, supporting a wide range of models, and ensuring all interactions are securely recorded on the blockchain, we empower you to leverage your trained models effectively and confidently.